Approximately 45% of the global population is online. That’s a lot of potential customers. Of course, there are also a lot of businesses online fighting for the attention of these customers.

There are various ways of getting the attention that businesses seek, from written content to videos and even paid advertising. All are effective, and all should be included in your efforts to attract new business.


Of course, creating all this different content and managing it takes time and effort. That’s why you should use the services of an agency to help you create the right campaign and monitor it properly.

The problem with all of this is that there are always people looking to beat the system. This was how link stuffing came into being, a practice that really isn’t good for your business.

But, link stuffing and similar tactics did highlight the flaws with search engines and how listings were created. This has led to a steady improvement in the way content is dealt with, ensuring the list you see after entering a search is as relevant and practical as possible.

Of course, there are times when search engines change the criteria and algorithms, effectively undoing months or even years of SEO work. But, in general, the improvements are a good thing.

Introducing BERT

People and businesses continue to flood the internet, and the result is that there is almost too much information available. You’ve only got to type in a few sickness symptoms to find that you could have any one of 100 diseases, and in almost every case cancer is listed.


In short, information is great but too much information can leave you worse off than none. Google is attempting to reduce the information overload by ensuring search results are as relevant as possible to the query.

The main issue is one of definition. Humans type in a variety of ways, but the computer only interprets the literal meaning of a phrase. For example, a human could write ‘quarter to six’ and mean that it was 15 minutes before the hour. They could also write ‘nine to 6’ and mean the time between nine and six.

A computer will only see the ‘to’ in one way, effectively reducing both statements to a time. That’s going to change the results you see and their relevance.

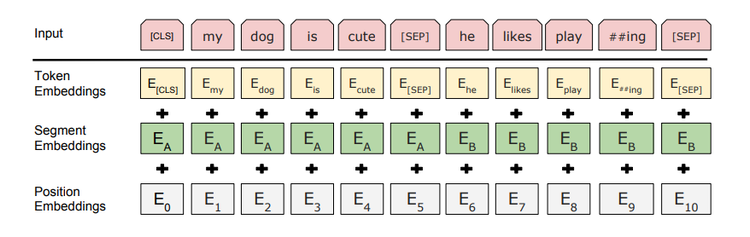

Bidirectional Encoder Representations from Transformers (BERT) is a deep learning model from Google. Its aim is to help a computer understand language in the same way that humans do. In effect, it’s merging a little artificial intelligence with existing algorithms to get a better result.

The system was first developed in 2018 and has been released as open source. This means that anyone can use it to train their own language processing systems and improve the search results generated. Best of all, any developer with a knowledge of machine learning can use, integrate, develop, and generally improve the model.


In effect, Google is allowing others to develop it in order to save resources and get the very best possible product in the shortest space of time.

It’s worth noting that the theory behind this is not new; other companies are also creating Natural Language Processing (NLP) models. But BERT is bidirectional and is pre-trained on plain, unlabelled text: a corpus of roughly 3.3 billion words drawn from English Wikipedia and a large collection of books.

In short, it’s not just going to help computers understand human language better. It will also improve the relevance of the search results you see and be used in a host of other applications. In fact, it has become so prominent in NLP that questions about BERT are now a common feature of interviews for machine learning candidates.

How BERT Works


BERT has been pre-trained on roughly 3.3 billion words of text, with an emphasis on bidirectional context. This means that it understands that ‘get well’ does not always mean the same thing. The phrase can refer to a wish or hope that someone will experience improved health, but it can also mean you’re looking for a water well.

The only way of knowing the difference is in the context of the search or surrounding questions. BERT analyses the whole sentence to determine the meaning of the two words accurately and thereby improves the results you see.
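To make the idea of context concrete, here is a deliberately tiny sketch. It is not how BERT works internally: the hand-written cue words and the `guess_sense` function are assumptions made up for this illustration, standing in for what a trained model learns from billions of words.

```python
# Toy illustration only: hand-written cue words stand in for what a trained
# model such as BERT learns from data. Nothing here is BERT's real mechanism.
SENSE_CUES = {
    "health": {"soon", "sick", "ill", "flu", "recover", "card", "wishes", "hospital"},
    "water well": {"water", "dig", "drill", "pump", "deep", "bucket", "garden"},
}


def guess_sense(query: str) -> str:
    """Pick the sense whose cue words overlap most with the words in the query."""
    words = set(query.lower().split())
    scores = {sense: len(words & cues) for sense, cues in SENSE_CUES.items()}
    best = max(scores, key=scores.get)
    # With no cue words at all, the two-word phrase alone cannot be resolved.
    return best if scores[best] > 0 else "unknown"


print(guess_sense("get well soon card"))            # health
print(guess_sense("get a well drilled for water"))  # water well
print(guess_sense("get well"))                      # unknown
```

The last call shows the point of the paragraph above: without the surrounding words, ‘get well’ on its own gives the computer nothing to go on.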

That’s a big difference. One-directional NLP models read the text either from left to right or from right to left. Google’s bidirectional approach considers the words on both sides of each term at once, allowing the computer to better understand the real meaning of the sentence.
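The difference between the two approaches can be sketched in a few lines. This is a simplified picture rather than BERT's actual architecture, and both function names are hypothetical, chosen only for this example: it simply lists which neighbouring words are available when interpreting one word in a sentence.

```python
def left_to_right_context(tokens, i):
    """Words a left-to-right reader has already seen when it reaches position i."""
    return tokens[:i]


def bidirectional_context(tokens, i):
    """Words on both sides of position i, which a bidirectional reader can use."""
    return tokens[:i] + tokens[i + 1:]


tokens = "get well soon after your operation".split()
i = tokens.index("well")

print(left_to_right_context(tokens, i))  # ['get']
print(bidirectional_context(tokens, i))  # ['get', 'soon', 'after', 'your', 'operation']
```

Reading left to right, the only clue for ‘well’ is ‘get’. With both sides available, ‘soon’ and ‘operation’ make the health meaning far easier to pick out.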

It does this through machine learning: pre-training on a huge volume of text teaches it how words and phrases are commonly used. The key is to understand the contextual relationship between words. Two words can mean different things depending on the sentence structure around them, and BERT is an attempt to understand this context better and thereby improve the relevance of any results displayed.

It’s a complex bit of technology with a lot of potential for the future, especially as it’s open-sourced.

Does This Mean the RankBrain Algorithm Is No Longer Needed?


RankBrain was the first attempt by Google to create an artificial intelligence-based algorithm. It also used machine learning and pre-programmed words and phrases to improve the results you receive.

Google does not intend to replace this with BERT. Instead, BERT is seen as an enhancement to the way that RankBrain works, improving its understanding, especially of difficult-to-read phrases.

Of course, the ultimate aim is complete understanding, but the algorithms and AI are not yet in place to facilitate this. However, just as BERT will improve the search function on Google, the algorithms and AI will also improve over time. Ultimately, the computer should be able to understand what you are talking about through context, recent communications, and potentially its ability to understand your thought process.

For now, BERT is a step forward in getting the best possible results for your search, but who knows where the future will lead us.